Recent news

Wed, Jan 21, 2026 Radoslav Skoviera presented at Roboty 2026

Thu, Oct 30, 2025 Karla Stepanova received Junior STAR project

Thu, Sep 18, 2025 Presentations in Týden pro Digitální Česko

Tue, Aug 26, 2025 New RAL paper – ILeSiA: Interactive Learning of Robot Situational Awareness from Camera Input

Mon–Wed, July 7–9, 2025 ELLIOT Kick-off and press release

Thu, June 26, 2025 Ondrej Holesovsky defended his PhD thesis

Thu, June 19, 2025 New TACR project – Eduardis

Thu, June 5, 2025 New RAL paper – Interactive robotic cable segmentation

Thu, May 22, 2025 Keynote talk at Cognition and Artificial Life, Slovakia

Tue, May 6, 2025 Gabriela Sejnova defended her PhD thesis

Wed, Apr 9, 2025 Martin Matousek presented at KRF 2025

Thu, Mar 20, 2025 Marina Ionova’s Diploma Thesis Wins Werner von Siemens Prize

Thu, Mar 13, 2025 Finished Industrial Project: Automated Trajectory Planning for Robotic Plastic Tank Welding

Wed, Feb 12, 2025 Two Papers from Our Group Accepted to ICRA 2025 and RAL

Radoslav Skoviera presented our HRI research at Roboty 2026

Radoslav Skoviera from our group presented our work on human–robot interaction at the industrial conference Roboty 2026 (talk title: “Robot, AI a člověk”, i.e., “Robot, AI and Human”). The presentation focused on what it really means for robots to understand humans: from natural language and nonverbal communication to environmental perception, adaptability to users, and safe responses to unexpected situations. Alongside applied projects such as an automated pallet jack and robotic pipe exploration, Radoslav introduced our long-term research vision for modular, robust, and explainable human–robot collaboration systems that combine classical robotics methods with modern AI.

The presented research is developed within national and European projects (GAČR, TAČR, OP JAK Roboprox, EU ELLIOT) and in collaboration with industry partners including Factorio Solutions and the startup Robotwin. From 2026 onward, this work will be further supported by the GAČR Junior STAR project focused on personalizable robots capable of adapting to different tasks, environments, and users through interactive dialogue.

The discussion during and after the talk showed that Czech companies still place only limited trust in large language models and natural human–machine interfaces, and that there is therefore significant potential to move this area forward.

More about the conference: https://konference-roboty.cz/

Karla Stepanova received Junior STAR project

Karla Štěpánová from our group has been awarded the prestigious JUNIOR STAR grant by the Czech Science Foundation (GA ČR).

As the head of the Robotic Perception Group at CIIRC, she won funding for her five-year project PersonalRobot: Customizing Robots via Multimodal Interactive Human–Robot Dialogues. The project targets one of the major goals of modern robotics: creating robots that learn much like junior employees do, through observation, explanation, and interactive dialogue.

Karla Štěpánová’s research bridges artificial intelligence, machine learning, and robotics. It has the potential to open new opportunities for deploying robots in small-scale manufacturing, social care, healthcare, and everyday life.

💬 “Receiving the grant is not only my achievement. I would like to thank CIIRC CTU and everyone who helped me prepare the proposal and provided valuable feedback. I am very happy, but I also feel a strong responsibility to use the funding as effectively as possible to contribute to Czech science,” says Karla Štěpánová.

👏 Congratulations! We are looking forward to results that will move Czech science and robotics another step forward.

More information: https://www.ciirc.cvut.cz/cs/karla-stepanova-ziskala-prestizni-grant-ga-cr-junior-star-2026-na-vyzkum-personalizovanych-robotu/

Presentations in Týden pro Digitální Česko

18.9.2025

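Our colleagues Julia Škovierová, Vladimír Smutný, and Radoslav Škoviera shared their research in the TV program Digitální Česko, during the sessions Úterní snídaně v Týdnu pro Digitální Česko (Tuesday Breakfast in the Week for Digital Czechia) and Čtvrteční snídaně v Týdnu pro Digitální Česko (Thursday Breakfast in the Week for Digital Czechia).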

You can watch the full video on YouTube: Julia at ~55:00, Vladimír at ~1:10:00, and Radoslav at ~1:28:00.

New RAL paper – ILeSiA: Interactive Learning of Robot Situational Awareness from Camera Input

26.8.2025

Our robots can now be situationally aware: they detect risky situations from image data observed during robotic executions, including previously unseen image data. This project was a collaboration with Delft University of Technology (TU Delft), the Netherlands. See our recent paper by Petr Vanc, Giovanni Franzese, Jan Kristof Behrens, Cosimo Della Santina, Karla Stepanova, Jens Kober, and Robert Babuska, published in IEEE RAL: https://ieeexplore.ieee.org/document/11130915

ELLIOT Kick-off and press release

7.7.2025

Would you like to see Europe leading in AI research and developing its own powerful multimodal model? So would we. The next four years will be challenging, but let’s hope we can achieve this. Project ELLIOT, launching this month and involving 32 partners, is setting ambitious goals to realize this vision — see the official press release for details.

We’re proud to be part of this consortium, representing the Czech side alongside the IMPACT Group and industrial partner RoboTwin s.r.o., focusing on the robotic perception use case.

The Czech press release provides a brief overview of the contributions our teams at CIIRC, Czech Technical University in Prague, are expected to make.

ELLIOT officially kicked off just a few days ago, and Karla Štěpánová from our Robotic Perception Group and Vladimír Petrík from the IMPACT Group are currently attending the kick-off meeting in Thessaloniki.

📰 The Czech press release: https://www.ciirc.cvut.cz/elliot-a-flagship-initiative-to-develop-open-multimodal-foundation-models-for-robust-ai-in-the-real/

📰 The official press release: https://apigateway.agilitypr.com/distributions/history/52135e0b-9025-48a8-9120-2490054de249

Ondřej Holešovský defended his PhD thesis

26.6.2025

His thesis “Interactive Robotic Perception of Cable-like Deformable Objects” can be found here

Supervisors: prof. Václav Hlaváč, Dr. Radoslav Škoviera

Google scholar profile: https://scholar.google.cz/citations?user=n76c7b8AAAAJ

Some of his most relevant papers:

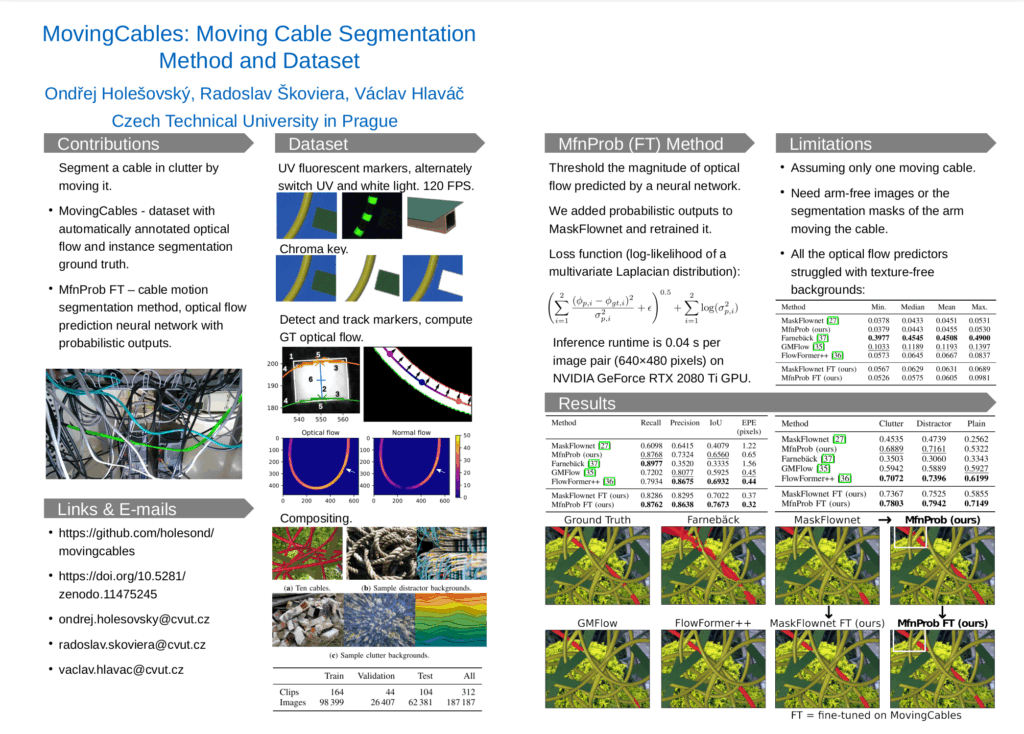

- Holešovský, Ondřej, Radoslav Škoviera, and Václav Hlaváč. “Interactive Robotic Moving Cable Segmentation by Motion Correlation.” IEEE Robotics and Automation Letters (2025).

- Holešovský, Ondřej, Radoslav Škoviera, and Václav Hlaváč. “MovingCables: Moving Cable Segmentation Method and Dataset.” IEEE Robotics and Automation Letters (2024).

- Holešovský, Ondřej, et al. “Experimental comparison between event and global shutter cameras.” Sensors 21.4 (2021): 1137.

New TACR project – Eduardis – Artificially intelligent art teacher

19.6.2025

Our collaborative project with the University of Hradec Králové has been selected for funding by TACR Sigma: it is one of 47 proposals funded out of 267 submissions, a competitive success rate of 17.6%.

This unique and exciting project will apply computer vision techniques to automatically evaluate student artworks — not only drawings, but also sculptures — by comparing them against teacher guidelines and example works.

- Principal investigator: University of Hradec Králové – Milan Salák

- CTU lead: Júlia Škovierová (ROP, CTU)

- Starting date: 1.9.2025

Project Objective:

To develop a web-based application that can assess the relevance of students’ creative processes across 48 specific continuous art tasks. These tasks span traditional imaging techniques in drawing, painting, and sculpture, and are designed to align with the educational progression in art instruction.

A parallel goal is to adapt task assignments based on the technical capabilities of the developed system, ensuring meaningful remote evaluation in visual arts education.

New RAL paper – Motion correlation method to enable robot to interactively segment cables

5.6.2025

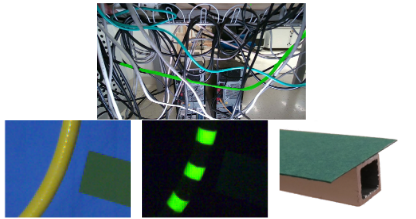

🚀 Tangled cables, hoses, or ropes? Robots can now handle them better – even in cluttered environments. See the recent paper by Ondrej Holesovsky, Radoslav Skoviera, and Vaclav Hlavac published in IEEE RAL 👉 https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=11017607

Our goal is interactive robotic perception for real-world deformable object manipulation.

In our latest work, we:

🔹 Combine visual and proprioceptive sensing to segment grasped cables during motion

🔹 Introduce the Motion Correlation (MCor) method – no robot arm segmentation masks needed

🔹 Propose a novel grasp sampling strategy to enhance segmentation through interaction

🔹 Validate the approach on a physical robotic platform

📄 Read more in the IEEE paper 👉 https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=11017607

🔗 Also check out our related work on the MovingCables segmentation baseline and dataset (RAL): https://ieeexplore.ieee.org/document/10563988

Citation: O. Holešovský, R. Škoviera and V. Hlaváč, “Interactive Robotic Moving Cable Segmentation by Motion Correlation,” in IEEE Robotics and Automation Letters, vol. 10, no. 7, pp. 7420-7427, July 2025, doi: 10.1109/LRA.2025.3574960.

Keynote talk at Cognition and Artificial Life, Slovakia

22.5.2025

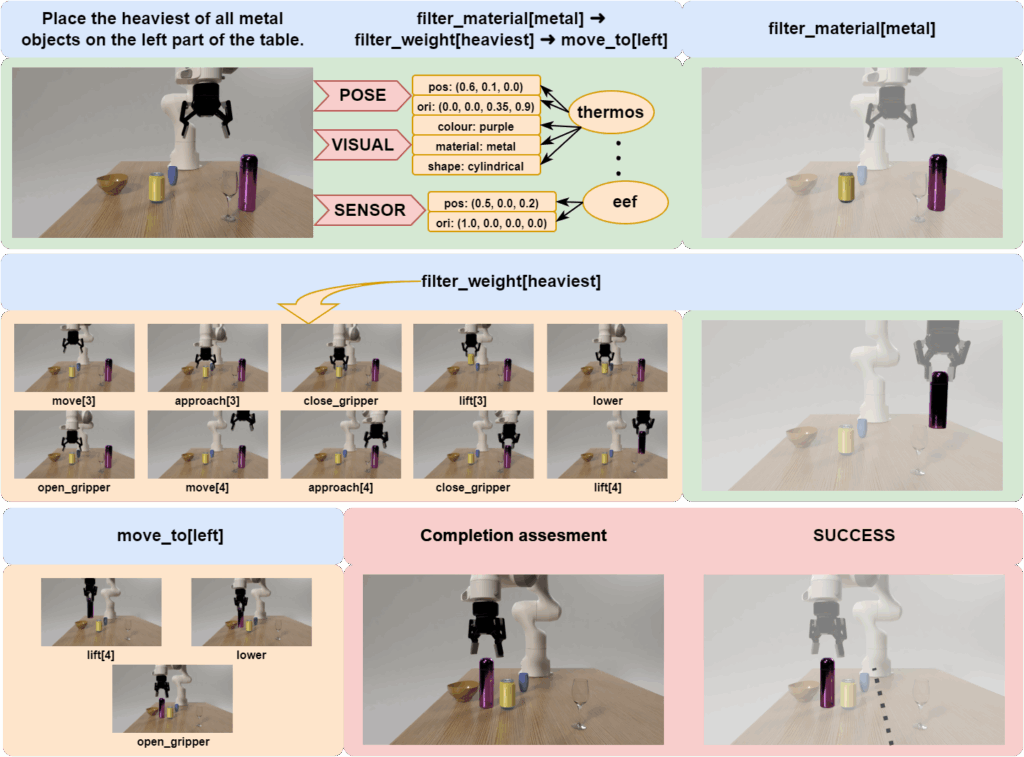

Karla Stepanova gave a keynote talk at the conference Cognition and Artificial Life in Slovakia.

As robotics continues to expand into dynamic and small-batch production settings, the need for intuitive and flexible task specification is becoming increasingly important. This talk presents a novel approach to natural task specification, enabling rapid task definition and deployment without the burden of extensive programming. By integrating human demonstrations, language, and gestures, we create more accessible and adaptable ways to define task parameters and constraints. Additionally, we explore robust task representation methods that structure these specifications for easy transfer across different robotic setups and enable their smooth translation into executable robot plans. This approach also enhances adaptability to new environments and represents a significant step toward more human-centric, flexible robotic systems, bringing us closer to truly natural collaborative automation.

📄 Slides: KUZ 2025 – Task specification for flexible robotics – PDF slides

Conference website: KUZ/CAL 2025

Gabriela Šejnová defended her PhD thesis!

6.5.2025

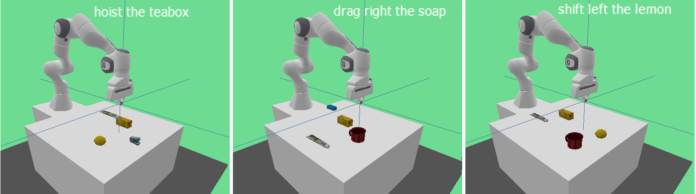

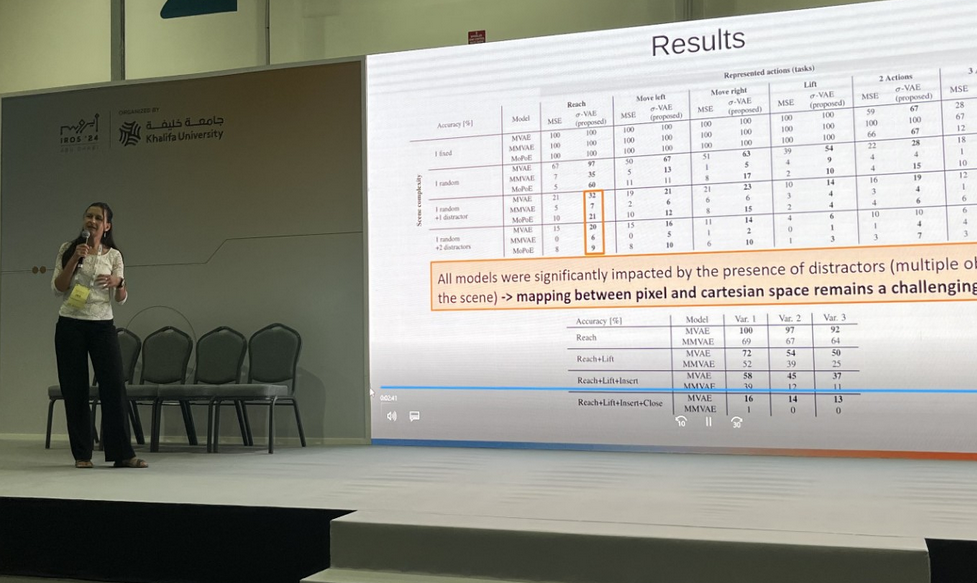

Her thesis “Multimodal variational autoencoder for instruction-based robotic action generation” can be found here

Supervisors: Dr. Michal Vavrecka, Dr. Karla Stepanova

Scholar profile: link

Some of her most relevant papers:

- Bridging Language, Vision and Action: Multimodal VAEs in Robotic Manipulation Tasks (IROS 2024)

- Imitrob: Imitation Learning Dataset for Training and Evaluating 6D Object Pose Estimators (RAL 2023)

- Feedback-Driven Incremental Imitation Learning Using Sequential VAE (ICDL 2022)

- Reward Redistribution for Reinforcement Learning of Dynamic Nonprehensile Manipulation (ICARR 2021)

- Exploring logical consistency and viewport sensitivity in compositional VQA models (IROS 2019)

Martin Matoušek Presented at the KRF 2025 Conference

At the 13th Conference on Radiological Physics, held April 8–10, 2025, in Srní, Czech Republic, Martin Matoušek delivered a presentation titled “Tool for Automatic Evaluation of Radiographic Calibration Phantom Images”, co-authored with Václav Hlaváč. The talk presented their work from the EDIH CTU project carried out in cooperation with Motol Hospital.

The presented tool leverages methods from digital image processing and computer vision to perform automatic quantitative analysis of radiographic calibration phantoms. It supports visual inspection of results, generates exportable XLS reports including measurements and graphs, and offers user-friendly outputs to assist operators during image evaluation. Originally developed for the Department of Medical Physics at FN Motol, the tool holds potential for adaptation to other phantom types.

🔗 More about the conference: csfm.cz – Conference 2025

Slides (in Czech): KRF 2025 talk – pdf (CZ)

Marina Ionova’s Diploma Thesis Wins Werner von Siemens Prize

20.3.2025

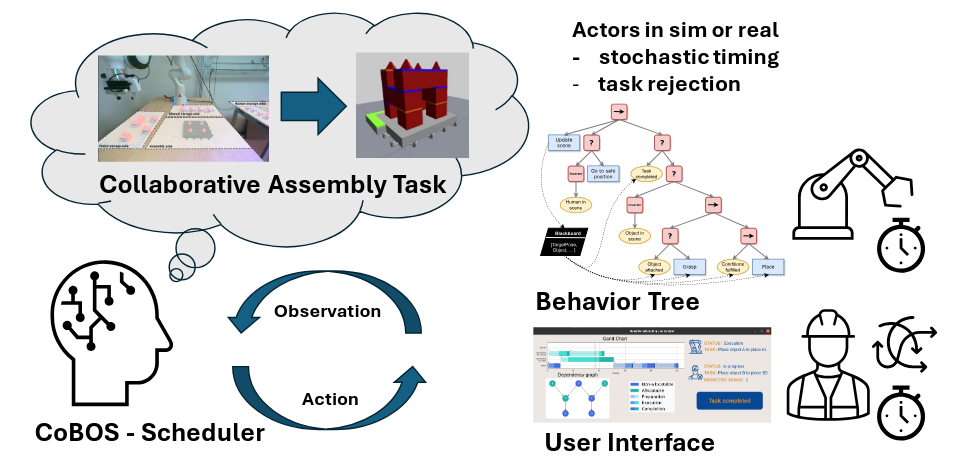

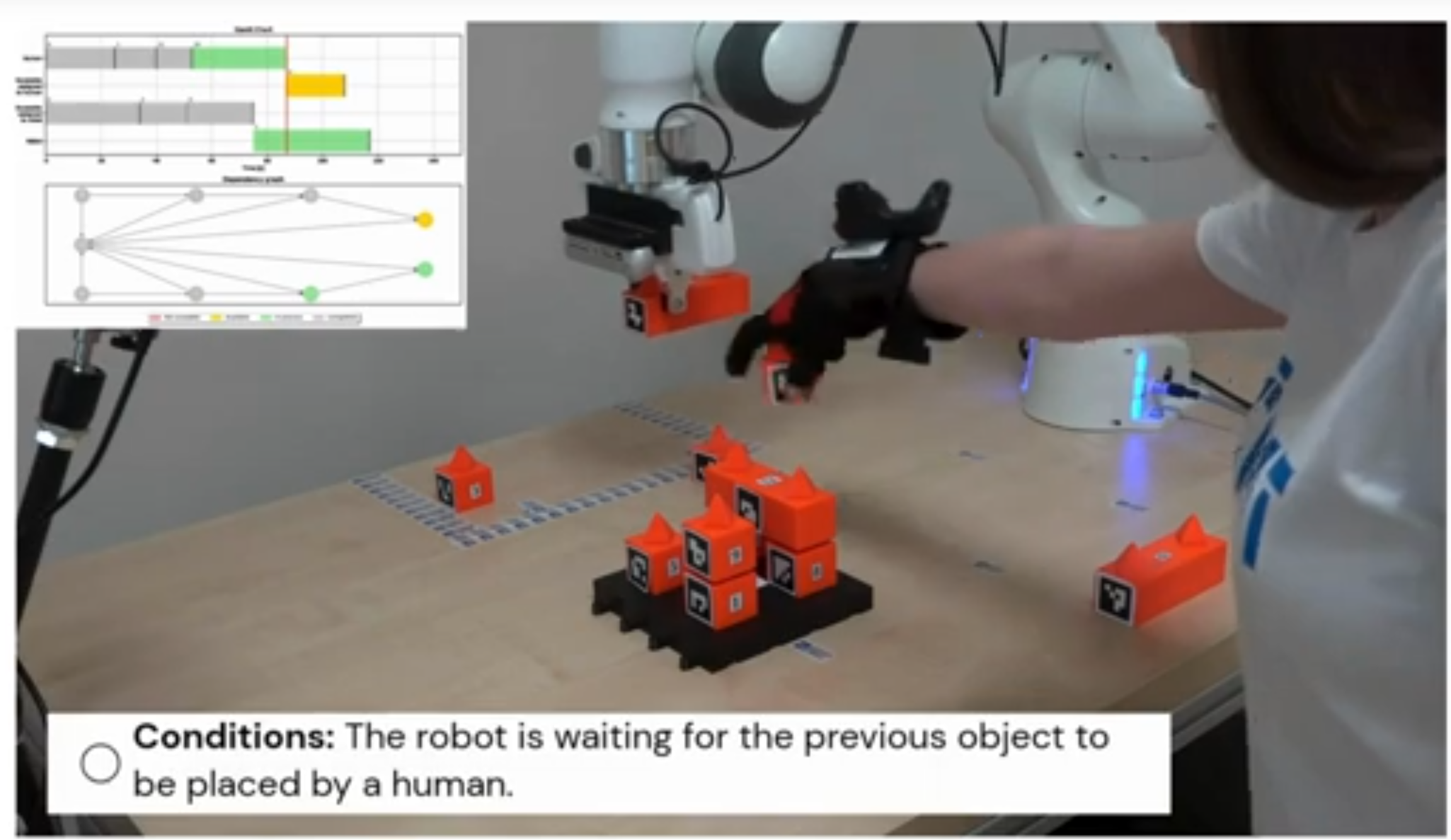

Marina Ionova’s master’s thesis has been honored with both the Werner von Siemens Prize for the Best Master Thesis in Industry 4.0 and the Special Jury Award for Outstanding Quality of Female Scientific Work.

Under the supervision of Dr. Jan Kristof Behrens from our Robotic Perception Group, Marina tackled one of the most intricate challenges in modern manufacturing: achieving truly seamless collaboration between humans and robots in dynamic production settings. By combining constraint‑based programming with behavior trees, her approach skillfully handles the uncertainty and non‑deterministic nature of human actions, paving the way for more adaptable and intuitive human‑robot teamwork on the factory floor.

We extend our heartfelt congratulations to Marina for this outstanding achievement and are proud that our group’s mentorship contributed to such impactful, forward‑thinking research.

Finished Industrial Project: Automated Trajectory Planning for Robotic Plastic Tank Welding

13. 3. 2025

Our Robotic Perception Group has successfully completed an industrial project focused on automating trajectory planning for a robotic welding cell used in plastic tank production. The project addressed a key bottleneck in small-batch manufacturing, where manual trajectory programming for each setup is inefficient and costly. Our system automates this step and generates an optimized robot trajectory based on 1) a digital model of the welding cell, 2) the tank to be welded, and 3) the specified weld seams.

With 9 degrees of freedom, trajectory planning becomes a complex optimization problem with multiple constraints. Our solution enables flexible, efficient deployment without manual intervention.

Team: Vladimír Smutný (lead), Pavel Krsek, Matěj Vetchý, Tomáš Fiala, and others.

Our partners from the TAČR project (2021–2023) include ALAD CZ, triotec s.r.o., and STP plast. The project was supported by the Technology Agency of the Czech Republic (Technologická agentura ČR) and EDIH CTU.

Two Papers from Our Group Accepted to ICRA 2025 and RAL

12.2.2025

We’re proud to announce that two papers from our Robotic Perception Group have been accepted for presentation at ICRA 2025, one of which has also been published in the RAL journal.

📅 Catch both presentations at ICRA 2025, May 19–23 in Atlanta, GA. We look forward to sharing our work and connecting with the robotics community!

📄 Closed-Loop Interactive Embodied Reasoning for Robot Manipulation

In collaboration with Imperial College London, this work explores how robots can dynamically adapt their actions using interactive perception (e.g., weighing, measuring stiffness) and neurosymbolic AI. Robots adjust at three levels—physical, action, and knowledge—based on real-time feedback during manipulation.

🤖 Authors: Michał Nazarczuk, Jan Behrens, Karla Štěpánová, Matej Hoffmann, Krystian Mikolajczyk

More about the paper: https://lnkd.in/ea2bgNiJ

🔗 Learn more:

🔗 ArXiv: https://lnkd.in/eksYzx-b

🌐 Project website https://lnkd.in/e9R5tgjj

📽️ YouTube video: https://lnkd.in/e2UB7BZs

🤖 In this work, we explore how robots can continuously refine their actions while executing a task, dynamically adapting to new information by combining neurosymbolic AI with interactive perception (e.g., weighing or measuring stiffness).

During task execution, our robot adjusts its actions based on feedback at three levels:

1️⃣ Physical level – If the object moves while the robot is grasping it, the trajectory must be adjusted.

2️⃣ Action level – If part of an assembly is disassembled, the robot must replan to achieve the goal.

3️⃣ Knowledge level – The robot updates its plan based on new observations (e.g., learning an object’s weight).


📄 MovingCables: Moving Cable Segmentation Method and Dataset

🤖 Authors: Ondřej Holešovský, Radoslav Škoviera, Václav Hlaváč

📘 Published in: IEEE Robotics and Automation Letters (RAL) – [PDF from IEEE]

🗓️ Thursday, May 22, 2025

⏰ 16:55 – 17:00

📍 Paper ThET15.5, Datasets and Benchmarking session

O. Holešovský, R. Škoviera and V. Hlaváč, “MovingCables: Moving Cable Segmentation Method and Dataset,” in IEEE Robotics and Automation Letters, vol. 9, no. 8, pp. 6991-6998, Aug. 2024, doi: 10.1109/LRA.2024.3416800.

Presentation for students from Charles University

8.1.2025

An interesting discussion on the ethical aspects of AI and autonomous robotics arose during our recent demo for students from Charles University. One particularly thought-provoking question was whether affordable humanoid robots might soon be available for household use and amateur development, and what broader implications this could have for robotics given the widespread adoption of large language models (LLMs), vision-language models (VLMs), and vision-language-action (VLA) models.

Other questions covered autonomous cars and the types of errors they might make, why Elon Musk insisted on using only cameras in Teslas, and the diverse applications of gesture-based robotics (the research focus of our PhD student Petr Vanc). These range from operating in environments that are inaccessible due to radiation or strict hygiene standards, through long-distance control such as piloting drones from the ground, to household robots and natural human-robot interaction.

Welcoming students from the Faculty of Humanities at Charles University as part of their course “Artificial Intelligence from the Perspective of Humanities” was truly a pleasure. I’m happy that our PhD student Karina Zamrazilová, who teaches this course, had the great idea of hosting one of their lessons at our institute.

Connecting people from diverse fields is a key part of our group’s philosophy. Interesting research questions often arise at the intersection of disciplines when we step outside the confines of a single perspective.

If you’re curious about what we do in our group and would like to try some of our demos, such as operating a robot through gestures or language, conducting a robotic experiment in VR, or exploring our more industrial activities like the brick-laying robot, don’t hesitate to reach out. We’d be happy to arrange something for you!

Essay in Academix Revue by Prof. Hlaváč

6.1.2025

An essay by Prof. Vaclav Hlavac on the challenges of visual perception in robotics can be found in the current issue (4/2024) of Academix Revue (in Czech), along with several other texts from members of our institute.

How about you? Do you find opportunities to share your work with broader audiences?

How often do you write for the general public? What benefits do you see in it?

Publishing not only for the scientific community but also for the general public should be an essential task for every researcher. While it may not directly contribute to their h-index, it often has a far greater impact on society as a whole.

Christmas party of the group

18.12.2024

Visit of a group from MIAS CTU (future technical economists)

13.12.2024

We had a nice visit from a group from MIAS CTU (Masaryk Institute of Advanced Studies; future technical economists) as part of the course Introduction to Robotics led by Prof. Stepankova. They came to see our human-robot collaboration setup.

Our robots at Czech TV – Wifina

25.11.2024

Karla Stepanova, Petr Vanc, and Libor Wagner presented our robots in Wifina, a children’s programme on Czech TV. Kuba, who visited us, tried to teleoperate the robot much as surgeons do, and he also tried to control it using gestures alone.

Are you curious why we call our robots “panda” or “capek”?

Or how robots listen to us?

You can see the video online from 2:30 here: Wifina – 27.11.2024

ATHENS Programme Students Visit at ROP, CTU CIIRC

22. 11. 2024

Students from the ATHENS Programme (organized by Prof. Procházka) recently visited our group as part of their trip to the Czech Technical University’s Czech Institute of Informatics, Robotics, and Cybernetics (CIIRC). The event featured presentations that connected theoretical concepts with practical applications of computational intelligence. The students toured three laboratories: the Intelligent and Mobile Robotics Group, the Big Data and Cloud Computing Lab, and our Robotic Perception Group. We showcased our collaborative human-robot workplace, force-torque compliant robots, and an automated palletizer for a brick-laying robot. Participants appreciated the experience and found it motivating for their future studies.

IROS 2024 – Abu Dhabi

18. 10. 2024

This year, we presented two scientific papers from our group at IROS in Abu Dhabi (14–18 October 2024).

The paper “Bridging Language, Vision and Action: Multimodal VAEs in Robotic Manipulation Tasks” by Gabriela Šejnová, Karla Štěpánová, and Michal Vavrečka addresses a key topic in the development of intelligent autonomous robots: how to teach robots to perform complex manipulation tasks based on language commands, visual perception, and demonstrated movements. The goal of the research is a higher level of autonomy for robots that can recognise different objects and perform new tasks with them based on linguistic instructions, without the need for pre-programming. Article about the paper: CIIRC web. Full article here: ArXiv, Video.

The second paper, “CoBOS: Constraint-Based Online Scheduler for Human-Robot Collaboration” by Marina Ionova and Jan Kristof Behrens, proposes a novel approach to online constraint-based scheduling of tasks between a human and a robot. Its reactive execution-control framework, called CoBOS, is built on behavior trees and allows the robot to adapt to uncertain events such as delayed activity completion and the human’s choice of which activity to perform. Full article here: ArXiv, YouTube.

Researchers’ Night 2024

27. 9. 2024

Our group took part in Researchers’ Night. Vladimir Smutny, Pavel Krsek, and Mira Uller presented their automated palletizer, and Rado Skoviera and Karla Stepanova presented the human-robot collaboration workplace. Both presentations were very successful. The only issue was that the microphone broke soon after being passed from person to person, so we could not let the kids command the robot themselves. They still had a lot of fun, though.